From the “better late than never department”…

With nearly the whole world under one form or another of stay-at-home orders in April, anyone who could turned to the Internet to work, learn, relax, or maintain social connections. The increased traffic raised concerns about whether the Internet would catastrophically fail, but the good news is that it did not, in large part due to its network-of-networks architecture. That architecture meant that the disruptions that did occur were, for the most part, limited in time and scope.

In April, the notable Internet disruptions reviewed below were caused by cable/fiber issues and network problems, as well as one where the cause was unknown.

Cable/Fiber Issues

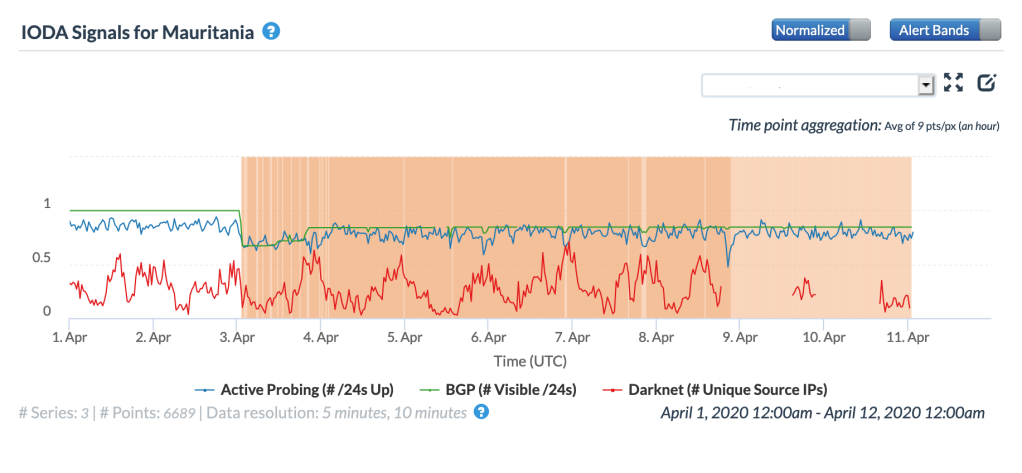

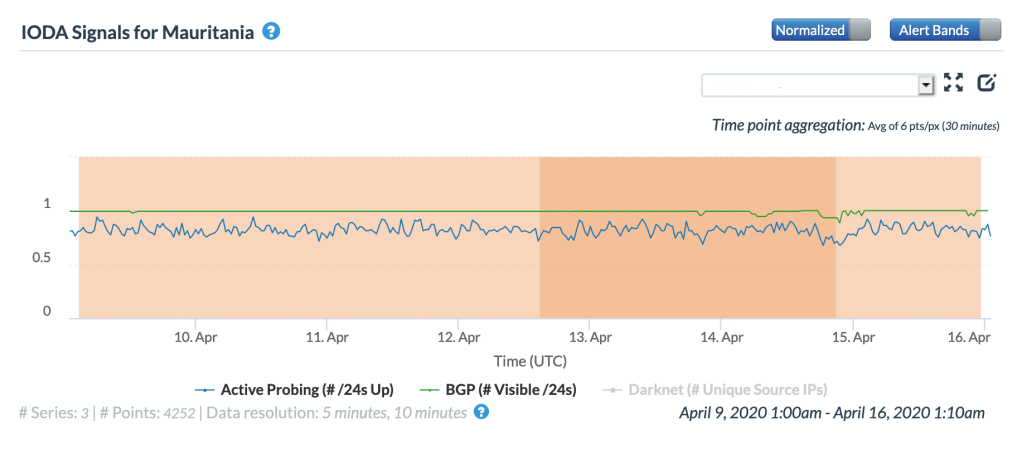

New problems with the ACE Submarine Cable caused nearly two weeks of Internet disruptions in Mauritania, starting on April 3. As seen in both the Oracle Internet Intelligence and CAIDA IODA graphs below, the cable problems had a nominal initial impact on the active probing metrics, with several additional brief drops seen through the period shown. There was also some impact seen in the BGP metric, though it appears more visible in the CAIDA data than in the Oracle data.

Oracle Internet Intelligence Country Statistics graph for Mauritania, April 4-10

Oracle Internet Intelligence Country Statistics graph for Mauritania, April 10-16

CAIDA IODA graph for Mauritania, April 3-10

CAIDA IODA graph for Mauritania, April 10-16

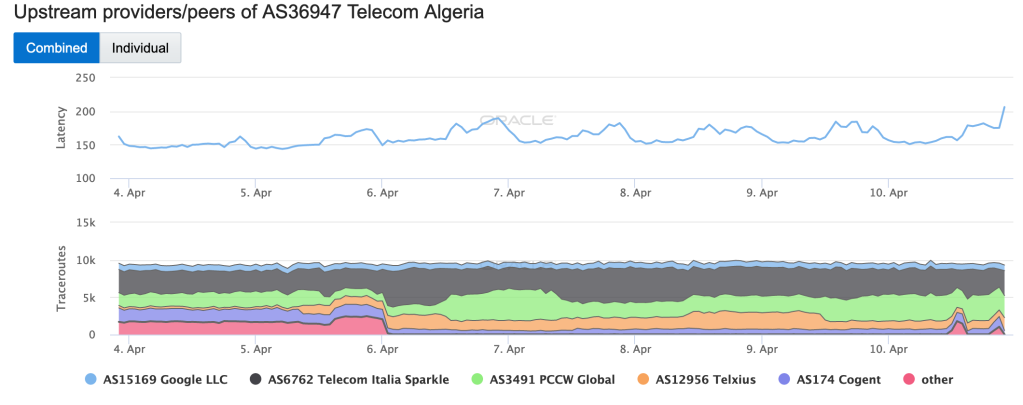

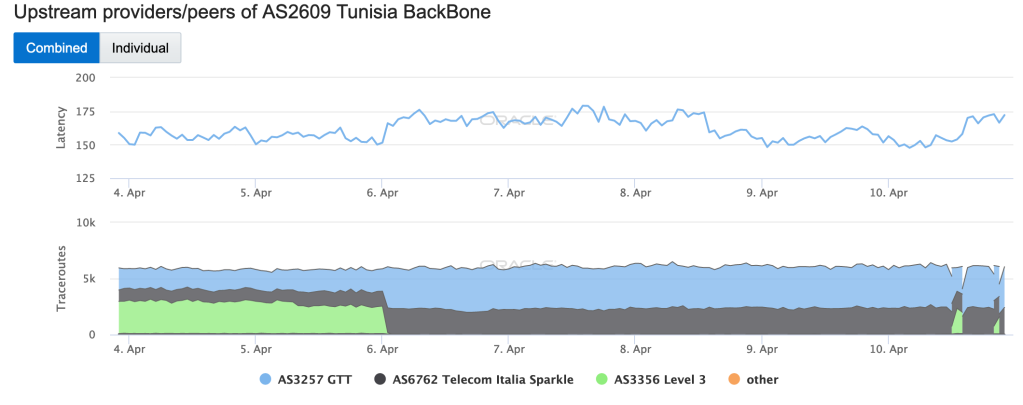

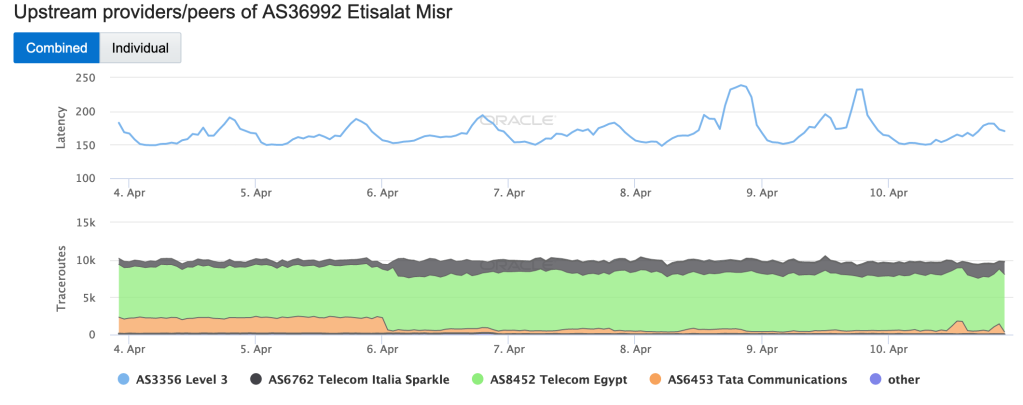

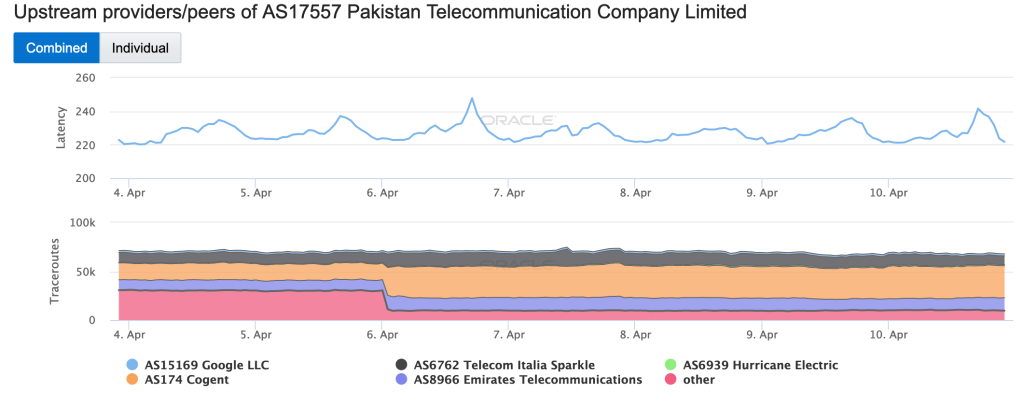

On April 6, a submarine cable repair ship was observed departing France with repair supplies, heading towards what was subsequently identified as the SEA-ME-WE-4 (SMW-4) submarine cable. Oracle Internet Intelligence noted that it had observed “the loss of numerous transit connections … consistent with the loss of a submarine cable” in countries including Algeria, Tunisia, Egypt, Saudi Arabia, and Pakistan just after 01:00 UTC that day. The graphs below show upstream providers shifting for networks in each of these countries very early (UTC) on April 6. The SMW-4 cable has landing points in each of these countries, among others.

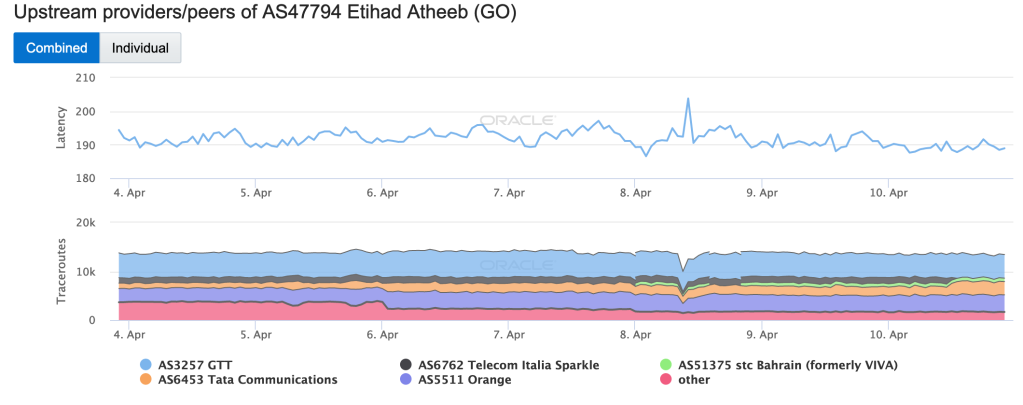

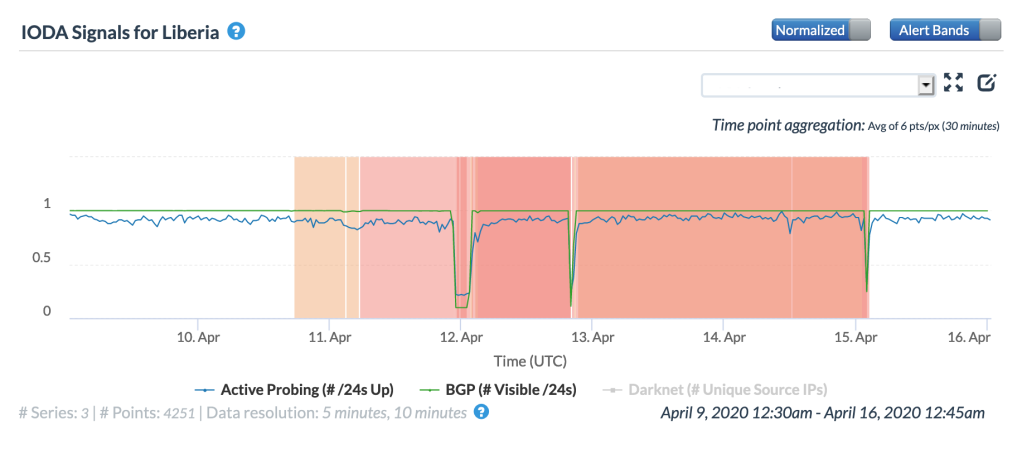

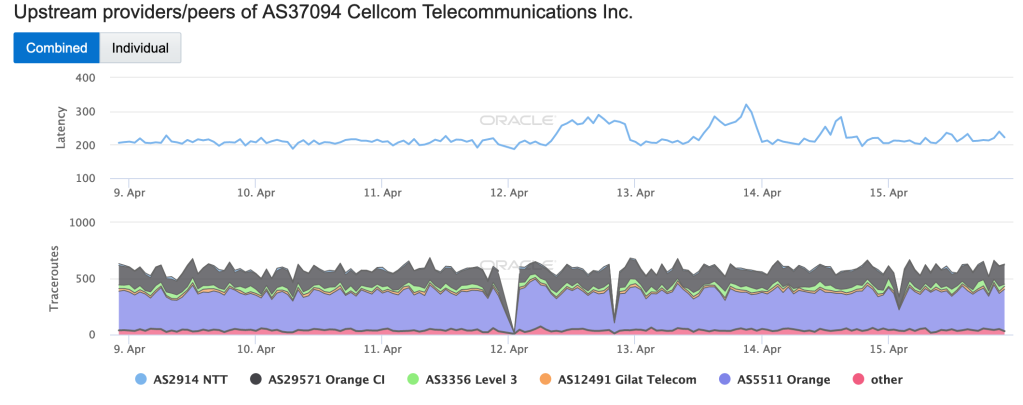

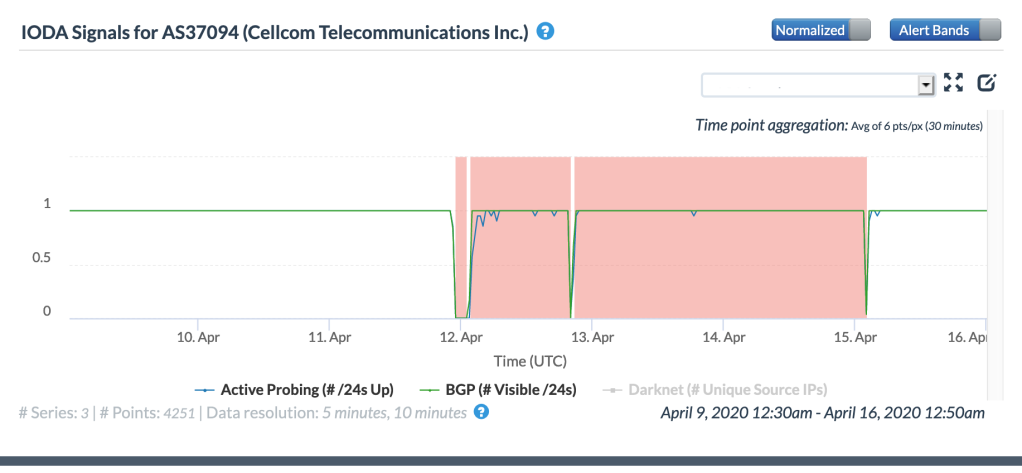

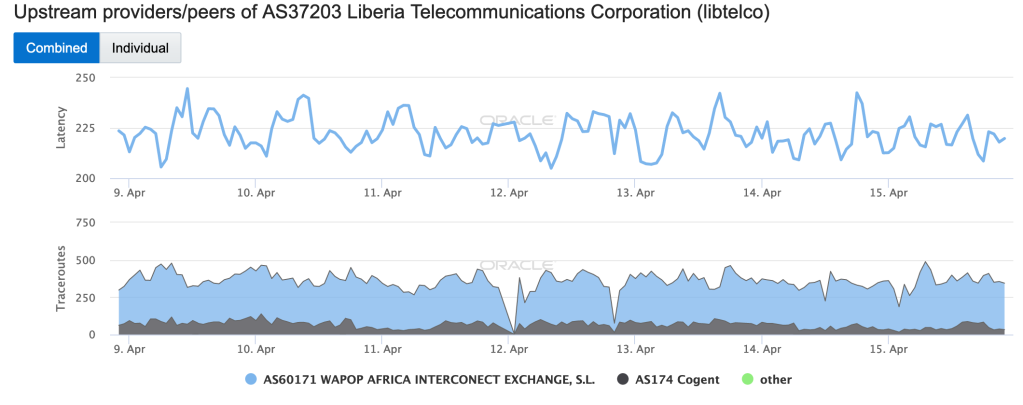

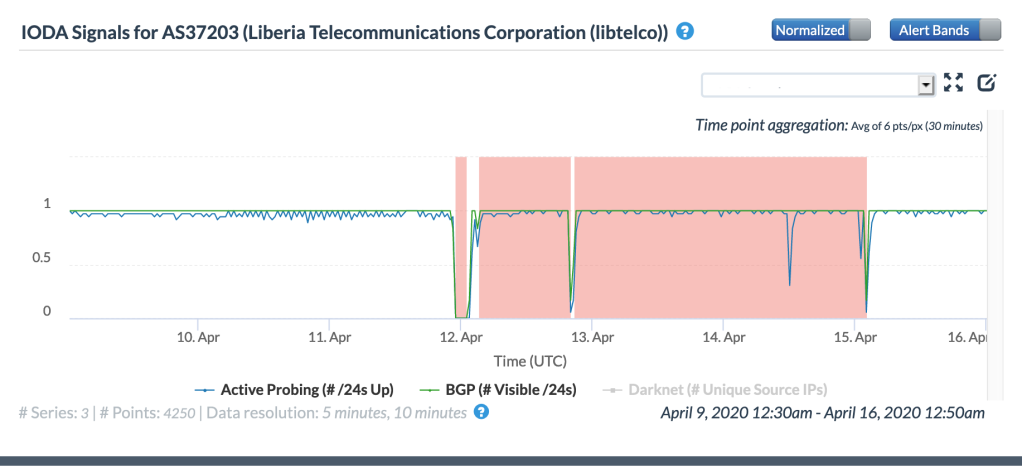

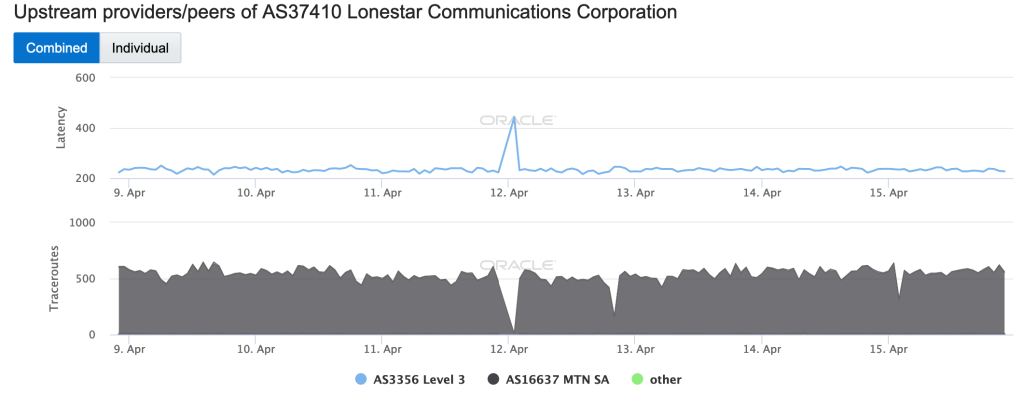

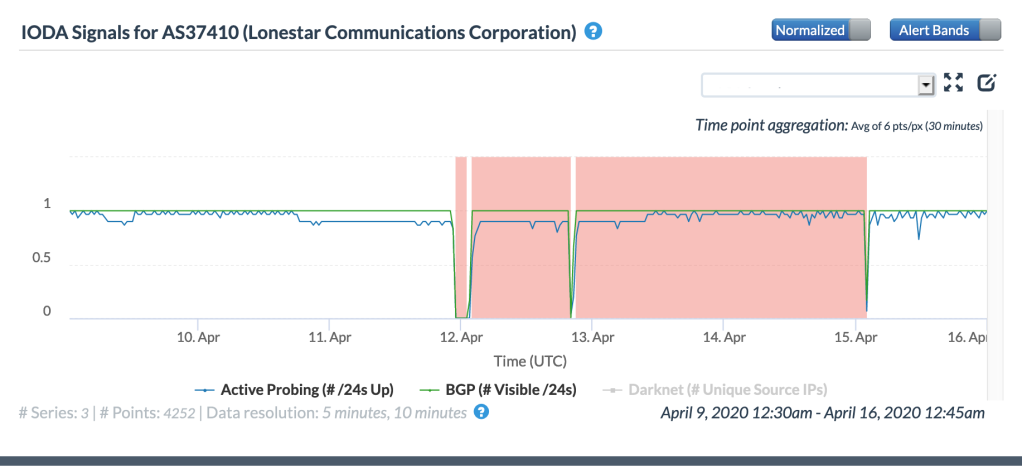

On April 11, Moses Karanja noted on Twitter that Liberia seemed to be having connectivity issues. It was subsequently confirmed that the connectivity issues observed that day were related to issues with the ACE Submarine Cable. The graphs below show the resulting Internet disruptions in Liberia late in the day (UTC) on April 11, at both a country and network level, with impacts seen in both the active probing and BGP metrics. Disruptions also occurred on April 12 & 15, as seen in the graphs. While unconfirmed, these are likely related to issues with the cable as well.

Oracle Internet Intelligence Country Statistics graph for Liberia, April 11-15

CAIDA IODA graph for Liberia, April 11-15

Oracle Internet Intelligence Traffic Shifts graph for AS37094 (Cellcom Telecommunications)

CAIDA IODA graph for AS37094 (Cellcom Telecommunications)

Oracle Internet Intelligence Traffic Shifts graph for AS37293 (Libtelco)

CAIDA IODA graph for AS37293 (Libtelco)

Oracle Internet Intelligence Traffic Shifts graph for AS37410 (Lonestar Communications Corporation)

CAIDA IODA graph for AS37410 (Lonestar Communications Corporation)

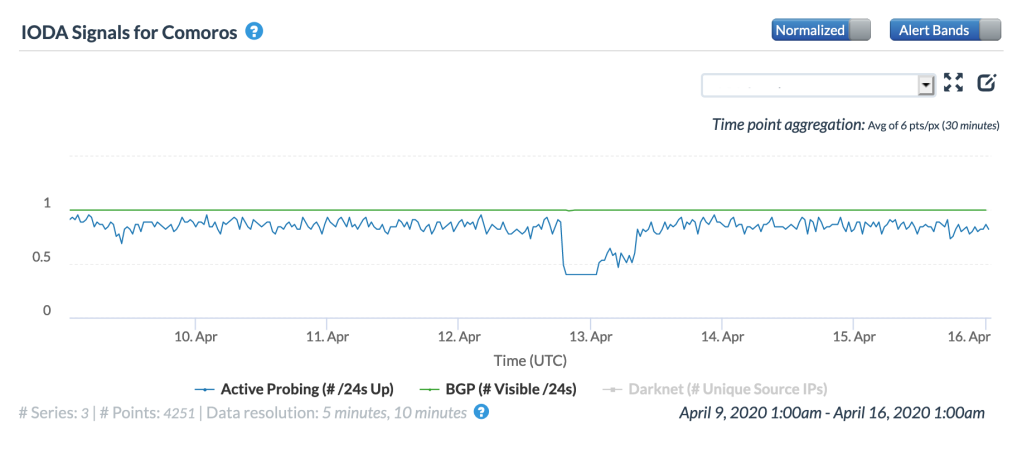

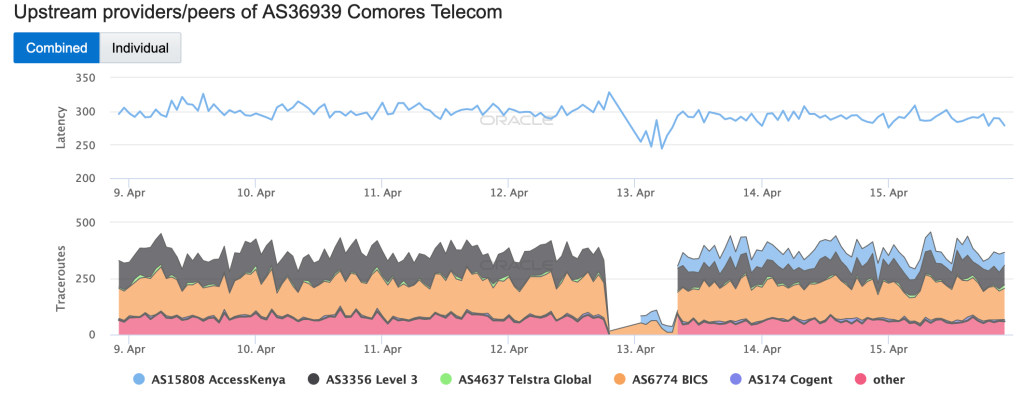

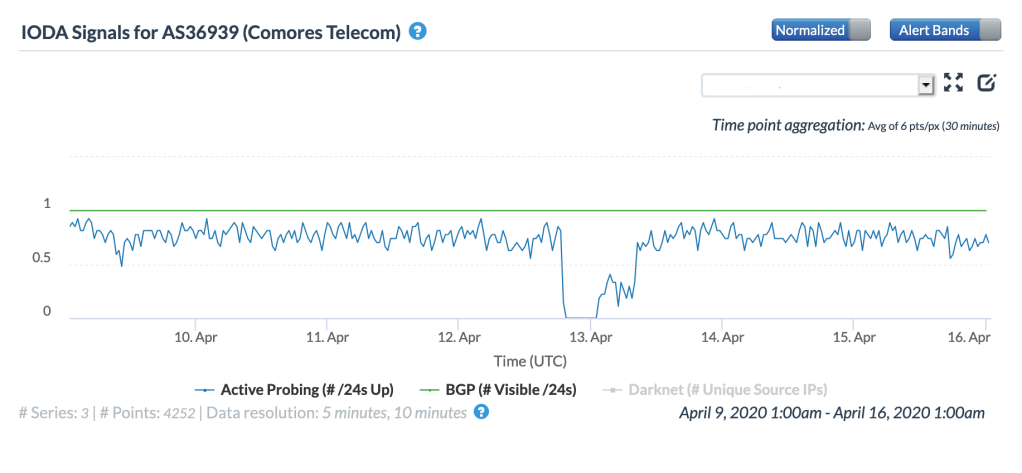

Starting around 17:00 GMT on April 12, an Internet disruption was observed in Comoros, impacting the active probing, BGP, and traffic metrics, as seen in the Oracle Internet Intelligence and CAIDA IODA graphs below. The disruption was largely mitigated after about 12 hours, with measurements returning to near-normal levels roughly six hours later. Comores Télécom posted an update to its Facebook page on April 13 stating (via Facebook’s translation) “Comoros Telecom informs its kind customers that the internet connection is restored. We renew our apologies for the inconvenience. Thank you for your loyalty.” While Comores Télécom didn’t specify what the root cause of the disruption was, the scope of the impact suggests that it could have been related to problems with the Eastern Africa Submarine System (EASSy) cable.

Oracle Internet Intelligence Country Statistics graph for Comoros, April 12-13

CAIDA IODA graph for Comoros, April 12-13

Oracle Internet Intelligence Traffic Shifts graph for AS36939 (Comores Télécom), April 12-13

CAIDA IODA graph for AS36939 (Comores Télécom), April 12-13

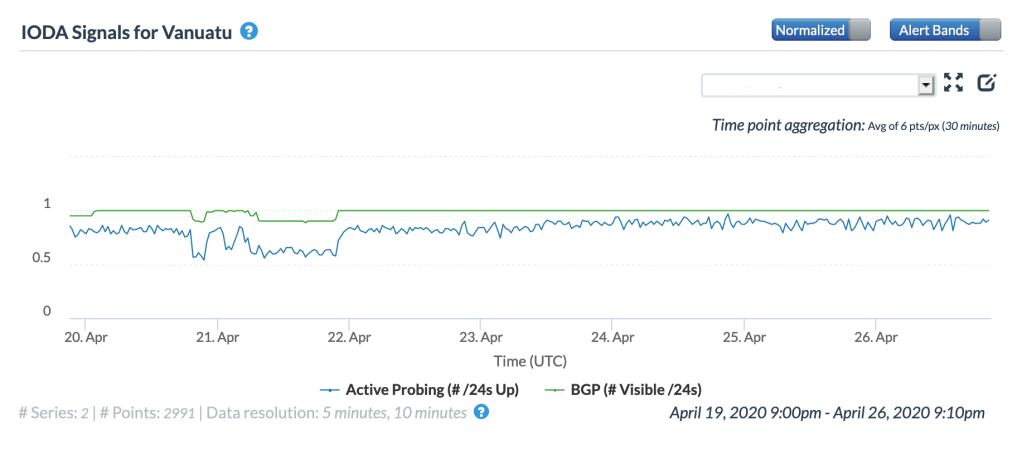

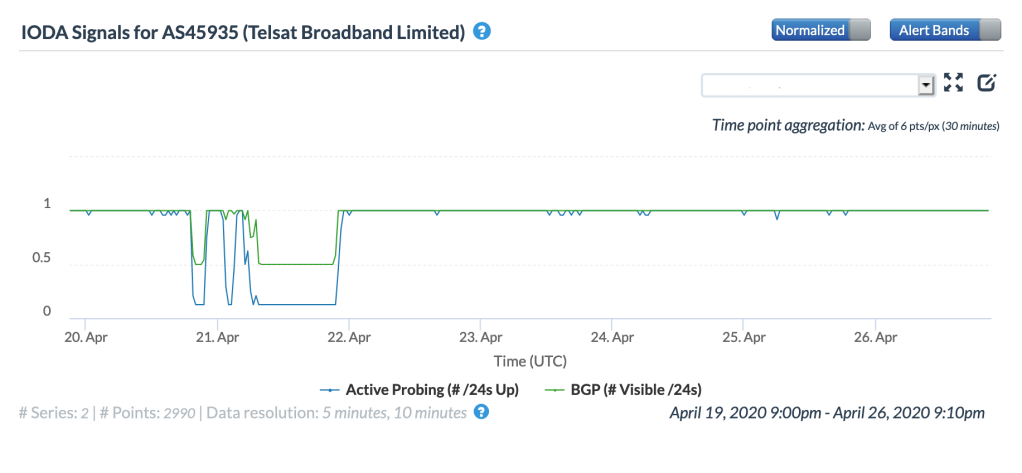

According to the Facebook post below from Telsat Broadband Vanuatu, connectivity issues experienced on April 20 & 21 were due to a problematic switch at a cable landing station.

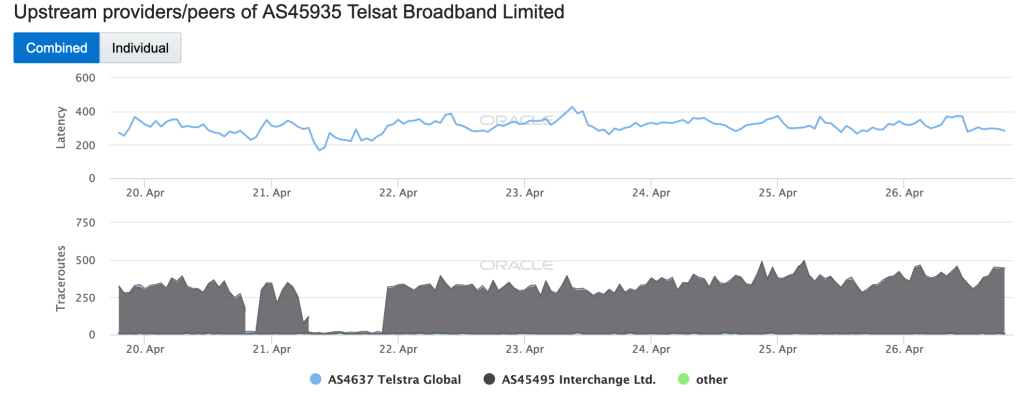

Interchange Cable Networks 1 & 2 (ICN1 & ICN2) both have landing stations on Vanuatu – both land in Port Vila, and ICN2 also lands in Luganville. Unfortunately, it wasn’t specified which of the landing stations the problematic switch was in. As seen in the graphs below, the disruption was nominal at a country level, but quite significant at a network level.

Oracle Internet Intelligence Country Statistics graph for Vanuatu, April 20-21

CAIDA IODA graph for Vanuatu, April 20-21

Oracle Internet Intelligence Traffic Shifts graph for AS45935 (Telsat Broadband Limited), April 20-21

CAIDA IODA graph for AS45935 (Telsat Broadband Limited), April 20-21

In the Tweet below from April 21, CenturyLink alerted customers of Internet service disruptions due to a fiber cut. While they didn’t provide details about the cause or location of the cut, subsequent Tweets from other users indicated that it was reportedly due to construction excavation, and that it occurred between Milwaukee, WI and Zion, IL.

Our CDN, IP and Transport services are experiencing service disruptions affecting some of our customers due to a fiber cut. Ensuring the reliability of our services is our top priority. We are working to minimize customer impact and will provide regular updates on our progress.

— CenturyLinkHelp Team (@CenturyLinkHelp) April 21, 2020

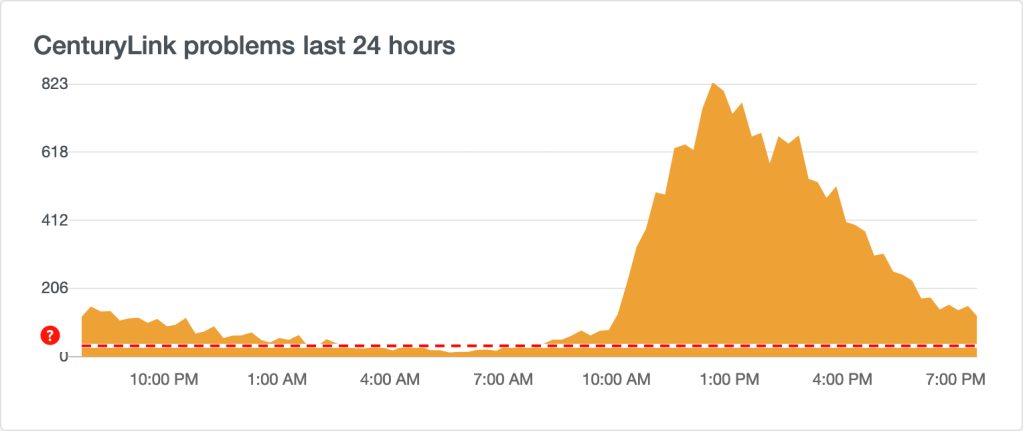

DownDetector, a site that tracks reported problems for major Internet service providers, applications, and Web sites, saw a sharp increase in reported issues for CenturyLink starting around 10:00 Eastern Time, as illustrated in the graph below.

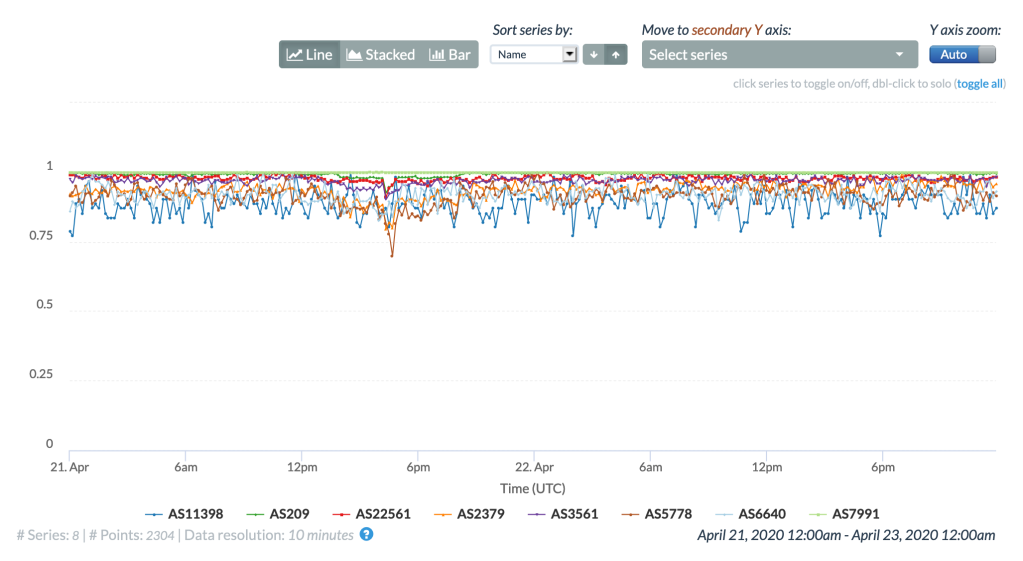

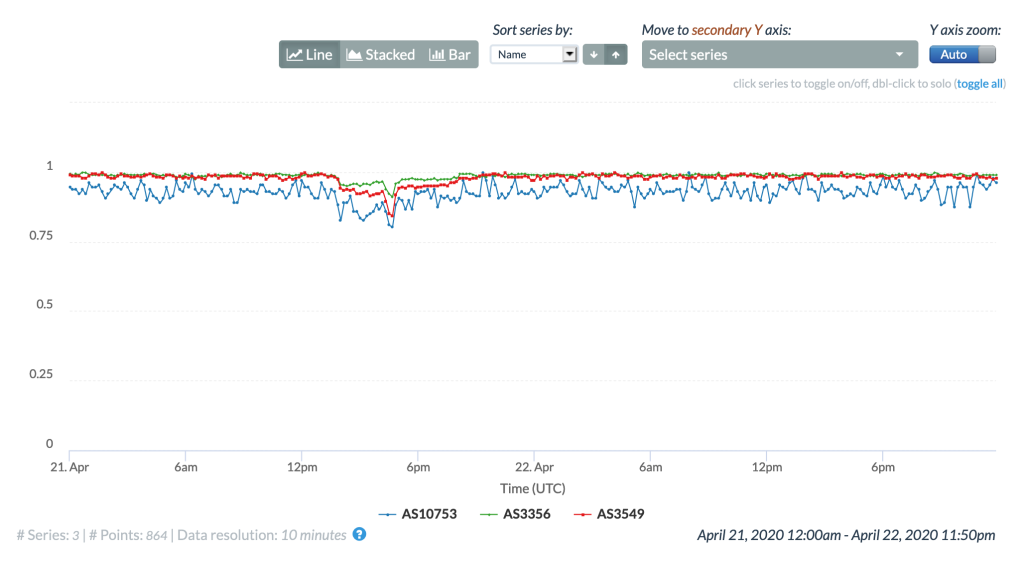

CenturyLink owns quite a few autonomous systems, in large part due to the acquisition of competing network providers over the years, including Level 3 in 2017. The top graph below from CAIDA IODA shows the impact to active probing measurements across autonomous systems registered to CenturyLink; the bottom graph shows the same measurements for autonomous systems registered to Level 3. While there is some degradation visible in the CenturyLink graph, the impact is more evident in the Level 3 graph.

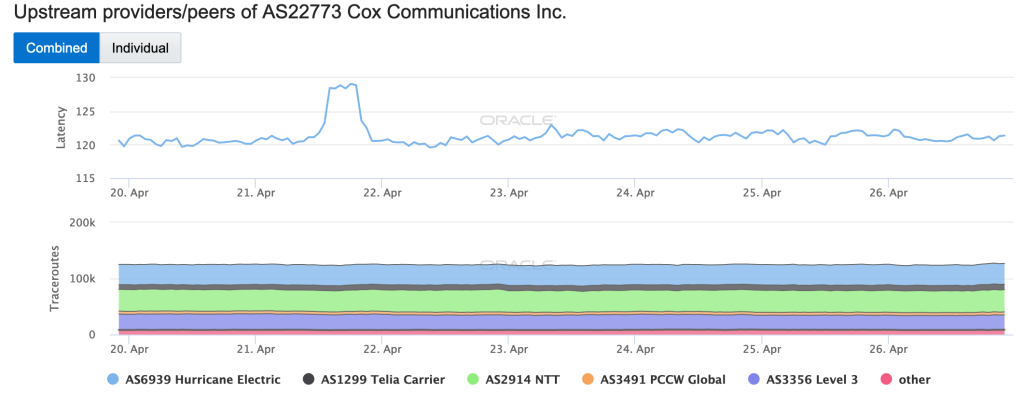

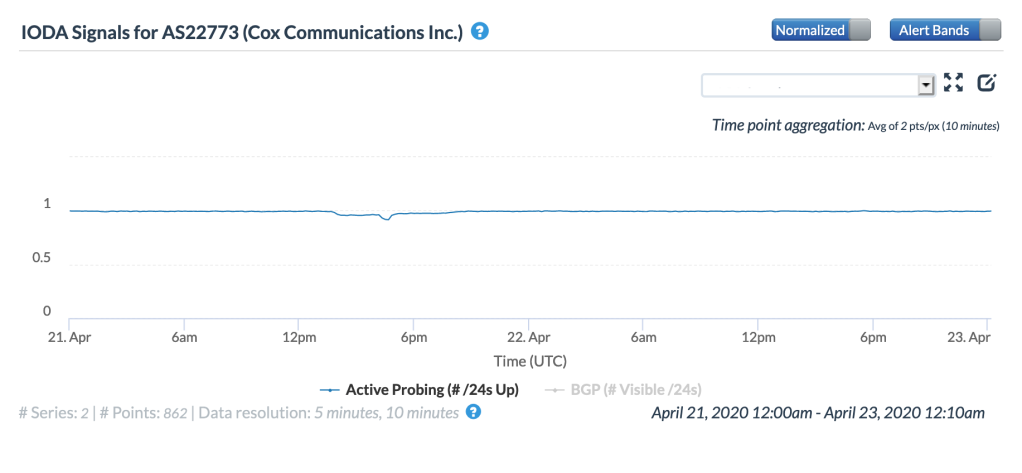

Last-mile provider Cox Communications relies on Level 3 (AS3356) as one of its upstream providers, and the graphs below show the impact of this fiber cut on Internet connectivity for the Cox network. The Oracle Internet Intelligence graph shows a significant increase in latency for traceroutes to endpoints in Cox, while the CAIDA IODA graph shows a slight drop in the active probing metric.

Oracle Internet Intelligence Traffic Shifts graph for AS22773 (Cox Communications)

CAIDA IODA graph for AS22773 (Cox Communications)

Network Issues

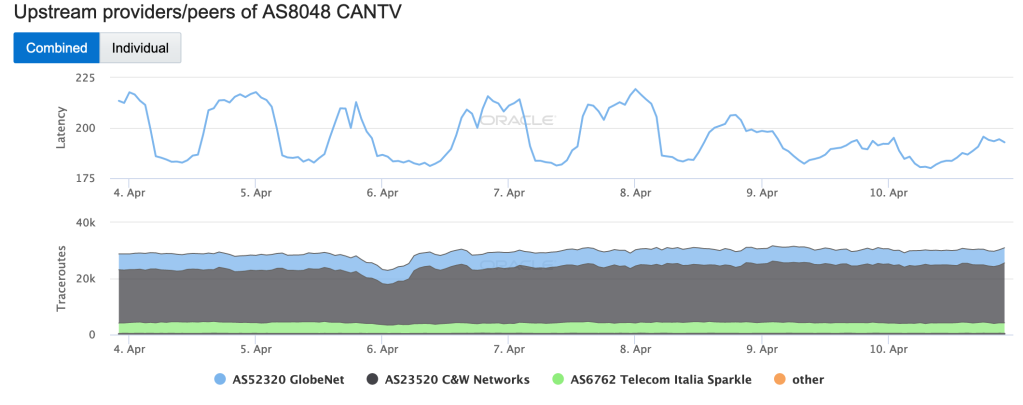

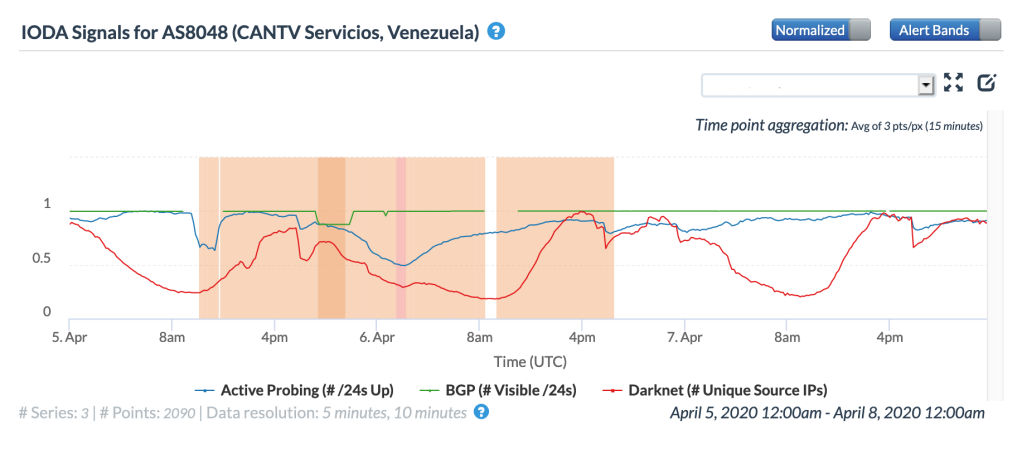

Internet connectivity for customers of state-run operator ABA CANTV in Venezuela was disrupted on April 5 due to a reported fire at the company’s offices in Chacao, Caracas, according to a Netblocks Tweet. The impact of the fire is evident in both of the graphs below, as it caused a drop in the active probing metrics starting the evening of April 5, lasting into the morning of April 6. CANTV’s official Twitter account (@salaprensacantv) confirmed the fire, noting that it was caused by the “power backups”, and posted several additional updates as repairs were made and connectivity restored.

Oracle Internet Intelligence Traffic Shifts graph for AS8048 (CANTV), April 5-6

CAIDA IODA graph for AS8048 (CANTV), April 5-6

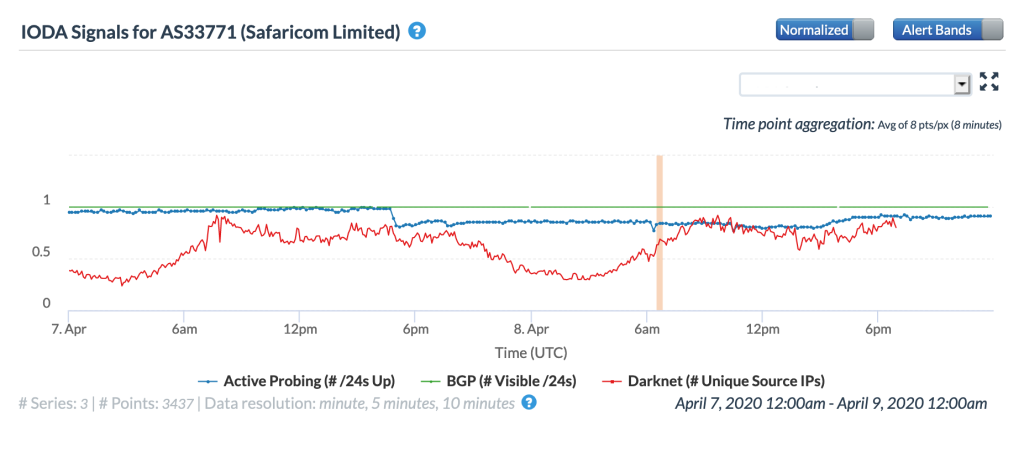

On April 7, Kenyan Internet provider Safaricom Tweeted about a service outage that was impacting a set of their “Home Fibre” customers.

We are currently experiencing a service outage affecting a section of Home Fibre customers. Our engineers are working to restore services as soon as possible and we are sorry for any inconvenience caused.

— Safaricom PLC (@SafaricomPLC) April 7, 2020

The CAIDA IODA graph below shows that the outage began at approximately 16:45 GMT, with partial service restoration occurring roughly 24 hours later. The impact is most evident in the Active Probing metric, although a nominal impact to the Darknet metric can also be seen at the same time.

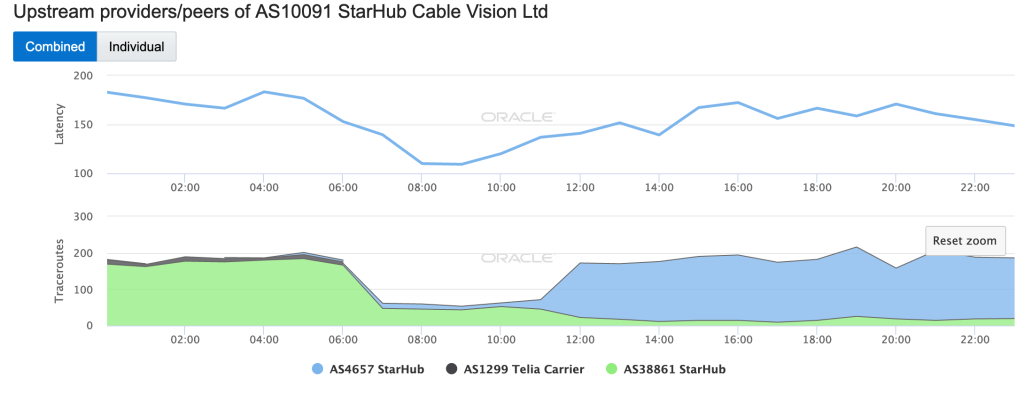

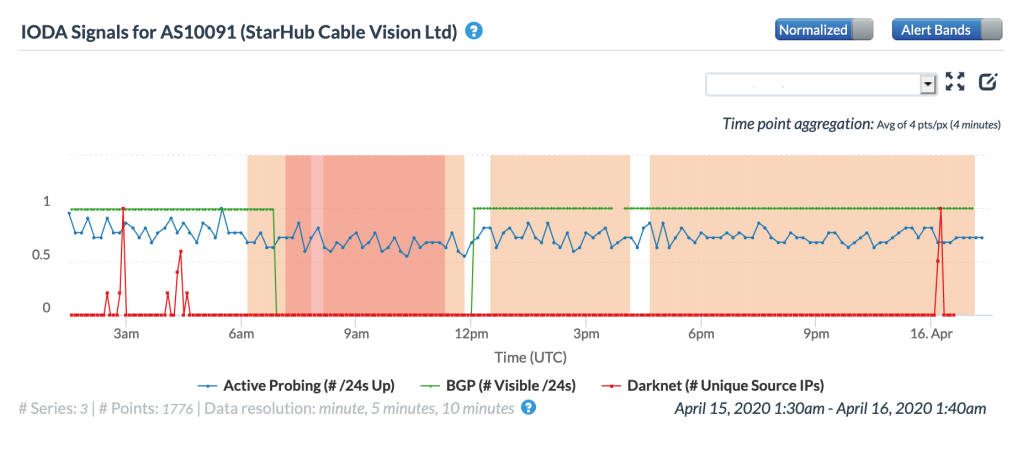

Published reports indicated that a multi-hour Internet disruption for customers of StarHub in Singapore caused problems for fiber broadband customers working and studying at home due to the coronavirus pandemic. The disruption can be seen in the graphs below, although the impact differs between them. In the Oracle graph, which displays the results of active probing tests, there is a clear decline in the number of successful tests to endpoints in this StarHub ASN transiting an upstream StarHub ASN. In the CAIDA graph, by contrast, the Active Probing metric, although somewhat noisy, doesn’t appear to see a material change. From a BGP perspective, however, the ASN effectively disappears from the Internet for the duration of the disruption.

Oracle Internet Intelligence Traffic Shifts graph for AS10091 (StarHub), April 15

CAIDA IODA graph for AS10091 (StarHub), April 15

A post to StarHub’s Facebook page provided updates as the situation progressed and gave the explanation below for the disruption. The company also offered affected customers a 20% rebate of their monthly fees as an apology for the service interruption.

“Traffic on our network is well below our available capacity and ample redundancy has been built into our network to cater for high service levels to be delivered consistently. The disruption arose due to a network issue with one of our Domain Name Servers that handles internet traffic routing, which has since been rectified.”

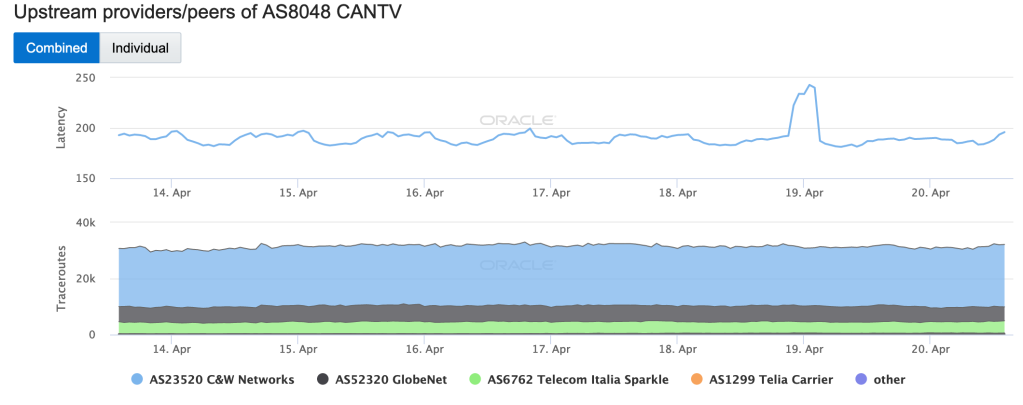

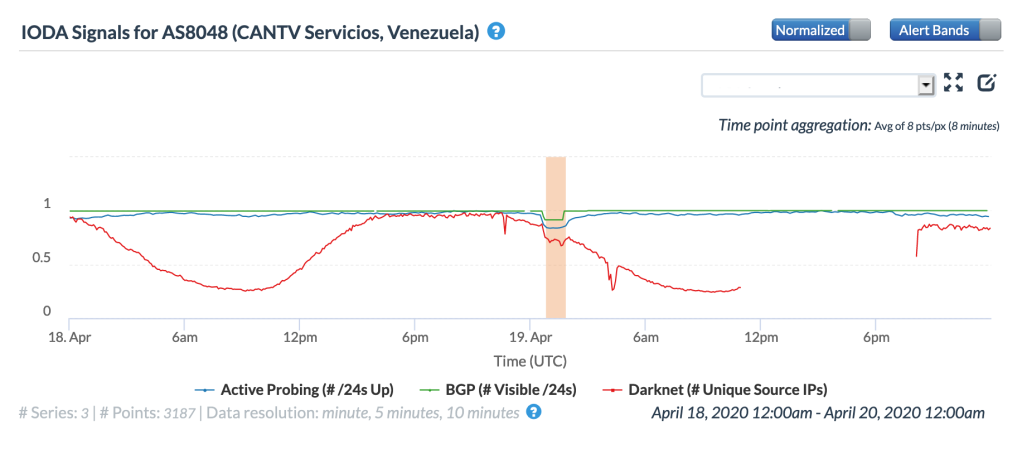

In addition to the disruption it Tweeted about on April 5, Netblocks observed a second Internet outage in Venezuela at state-run operator ABA CANTV on April 18. According to Netblocks, the issue began at 20:40 local time (00:40 GMT on April 19). The Oracle graph below shows increased latency for traceroutes to endpoints in CANTV starting just before midnight GMT, which may be indicative of problems that led to the eventual failure shortly thereafter. The failure is more evident in the CAIDA graph, which shows declines in all three metrics occurring just after midnight GMT.

Oracle Internet Intelligence Traffic Shifts graph for AS8048 (CANTV), April 18

CAIDA IODA graph for AS8048 (CANTV), April 18
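Because the graphs are plotted in GMT while the outage was reported in local time, it can help to check the conversion explicitly. The snippet below is a minimal sketch, assuming Venezuela observes a fixed UTC-4 offset with no daylight saving time; the names `VET`, `local_to_utc`, and `outage_start_local` are illustrative only.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Venezuela uses a fixed UTC-4 offset year-round.
VET = timezone(timedelta(hours=-4), name="VET")


def local_to_utc(local_dt: datetime) -> datetime:
    """Convert a timezone-aware local timestamp to UTC."""
    return local_dt.astimezone(timezone.utc)


# Reported start of the outage: 20:40 local time on April 18, 2020.
outage_start_local = datetime(2020, 4, 18, 20, 40, tzinfo=VET)
outage_start_utc = local_to_utc(outage_start_local)

print(outage_start_utc.isoformat())  # 2020-04-19T00:40:00+00:00
```

Under that assumption, the reported start falls just after midnight GMT, which is where the declines appear in the CAIDA graph.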

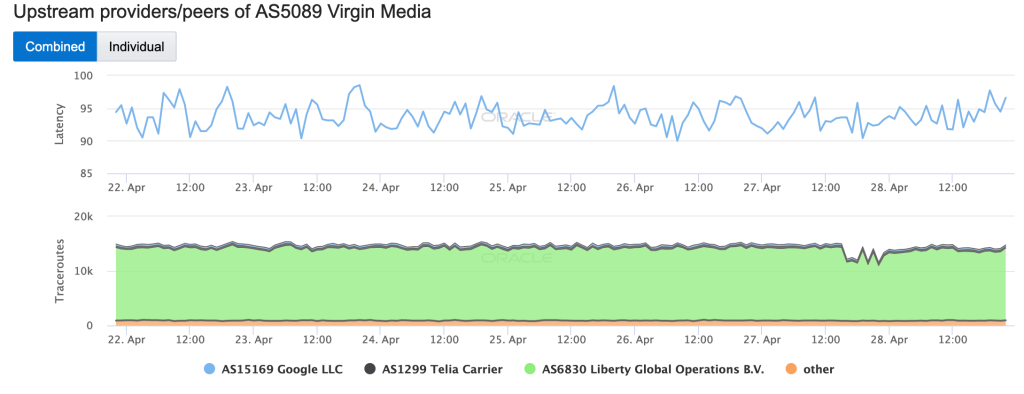

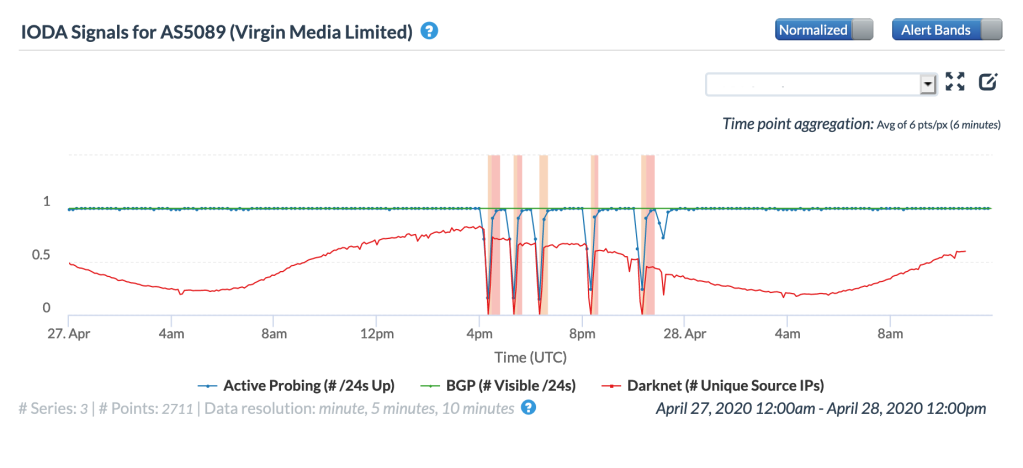

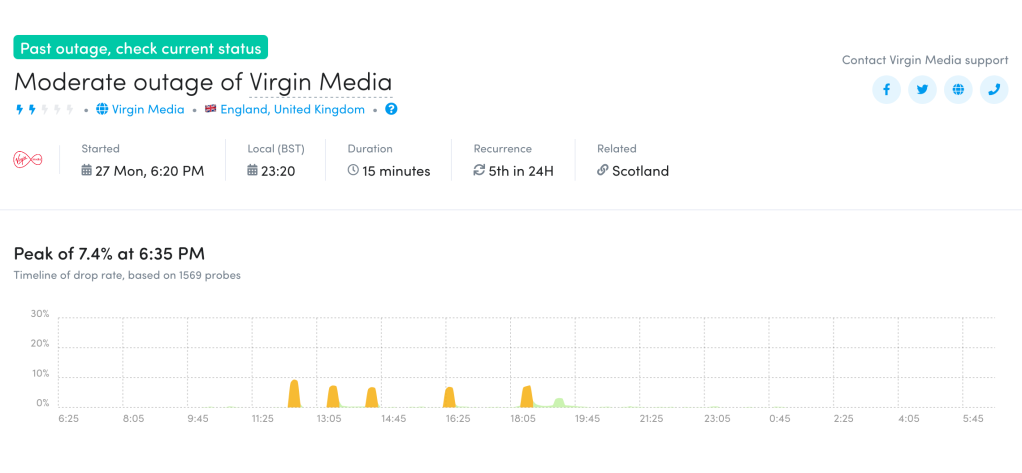

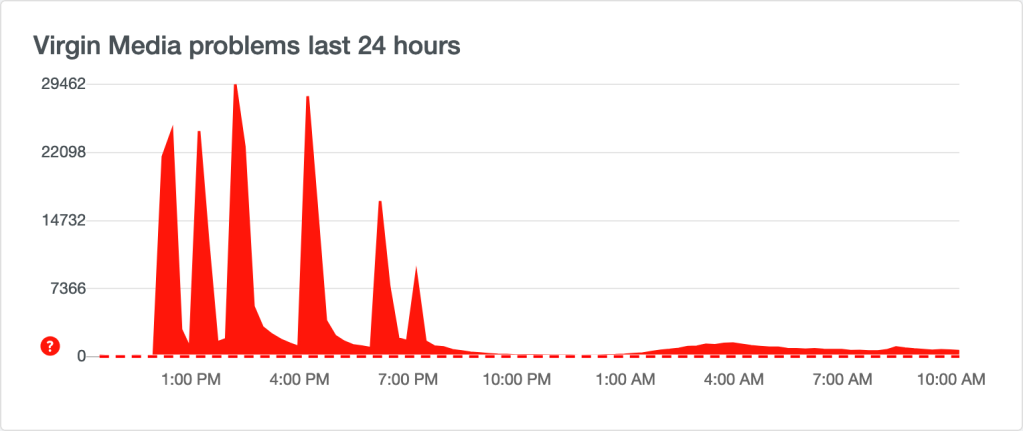

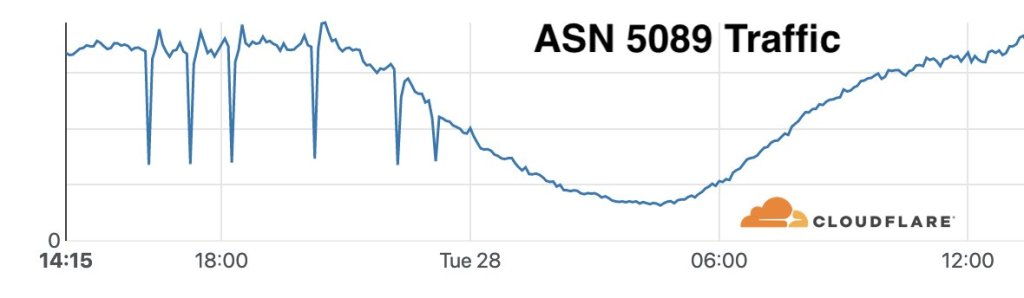

Customers of a number of European Internet service providers owned by Liberty Global all experienced Internet disruptions on April 27. Some of the most significant disruptions were seen on Virgin Media in the United Kingdom – in a somewhat unusual pattern, the disruptions occurred every hour or two over the course of eight hours, starting around 16:00 GMT. The Oracle and CAIDA graphs below show clear declines in active probing measurements to endpoints within the Virgin Media network, indicating that traffic could not get into the network. The drop in the CAIDA Darknet metric and the dips in the Cloudflare traffic graph show that traffic was not getting out of the Virgin Media network either.

Oracle Internet Intelligence Traffic Shifts graph for AS5089 (Virgin Media), April 27

CAIDA IODA graph for AS5089 (Virgin Media), April 27

Fing Internet Alerts graph for AS5089 (Virgin Media), April 27

DownDetector graph for Virgin Media, April 27

Cloudflare traffic graph for AS5089 (Virgin Media), April 27
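A repeating pattern of short drops like this one can be picked out of a measurement series with a simple threshold against a baseline. The sketch below is purely illustrative: it is not the method used by Oracle, CAIDA, Fing, or Cloudflare, the `find_dips` helper and its 80% threshold are assumptions, and the sample values are hypothetical rather than taken from any of the graphs.

```python
from statistics import median


def find_dips(samples, threshold=0.8):
    """Return inclusive (start, end) index pairs where values fall
    below threshold * median of the whole series."""
    cutoff = threshold * median(samples)
    dips = []
    start = None
    for i, value in enumerate(samples):
        if value < cutoff and start is None:
            start = i  # a dip begins
        elif value >= cutoff and start is not None:
            dips.append((start, i - 1))  # the dip ended on the previous sample
            start = None
    if start is not None:
        dips.append((start, len(samples) - 1))  # series ends mid-dip
    return dips


# Hypothetical successful-probe counts, one value per measurement interval.
series = [100, 98, 40, 99, 101, 100, 35, 30, 97, 100, 45]
dips = find_dips(series)
print(dips)  # [(2, 2), (6, 7), (10, 10)]
```

Using the median as the baseline keeps a handful of deep drops from dragging the reference level down, which a mean would be more prone to do.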

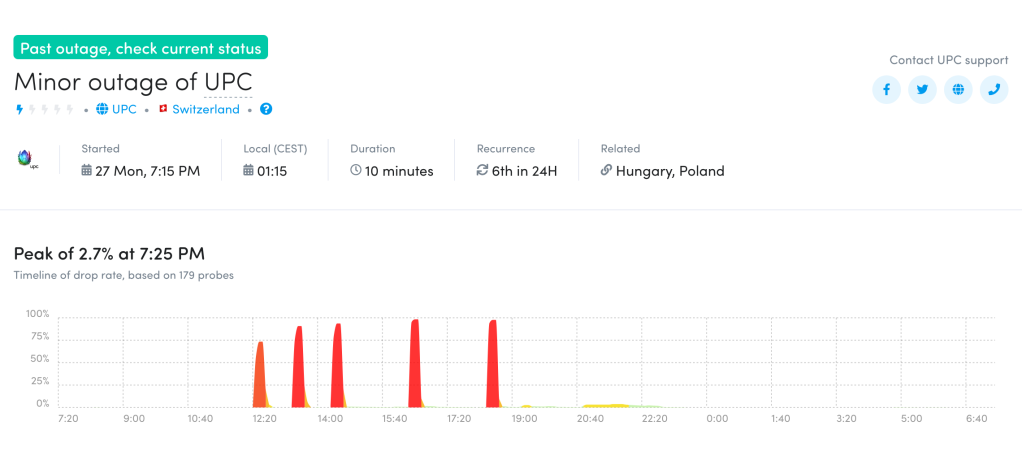

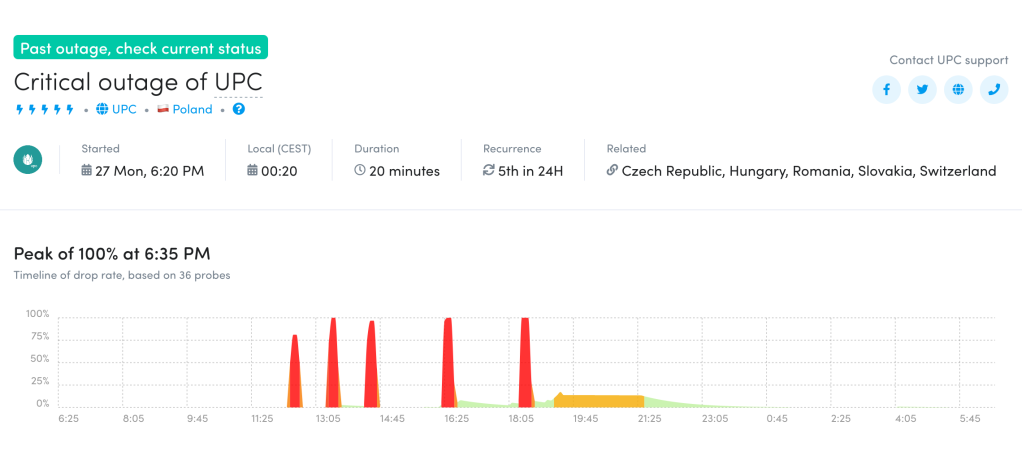

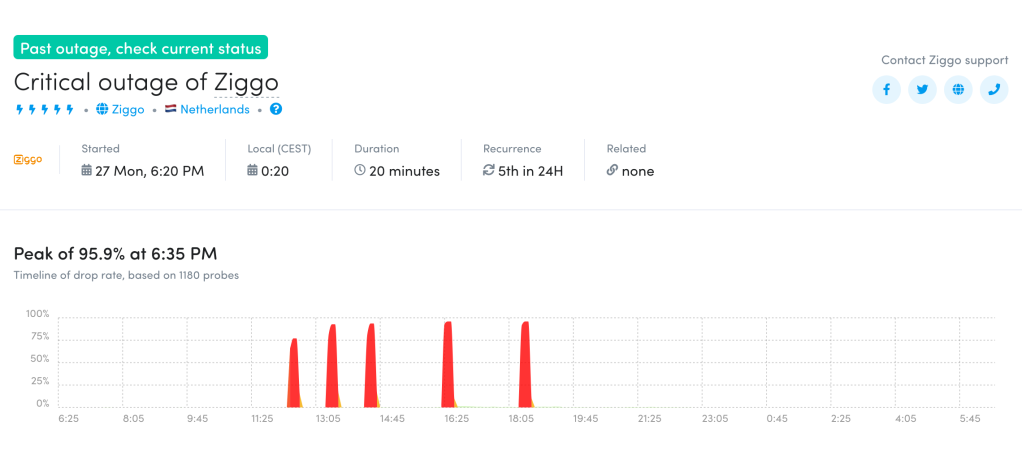

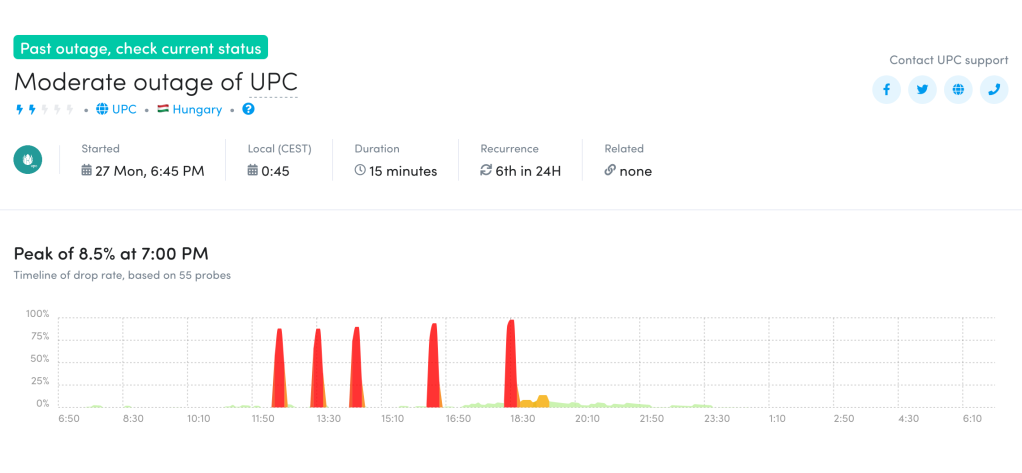

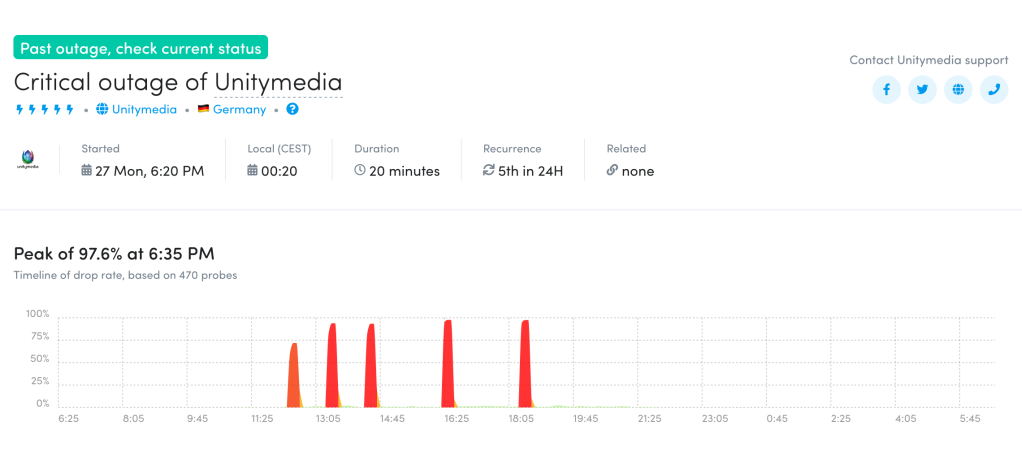

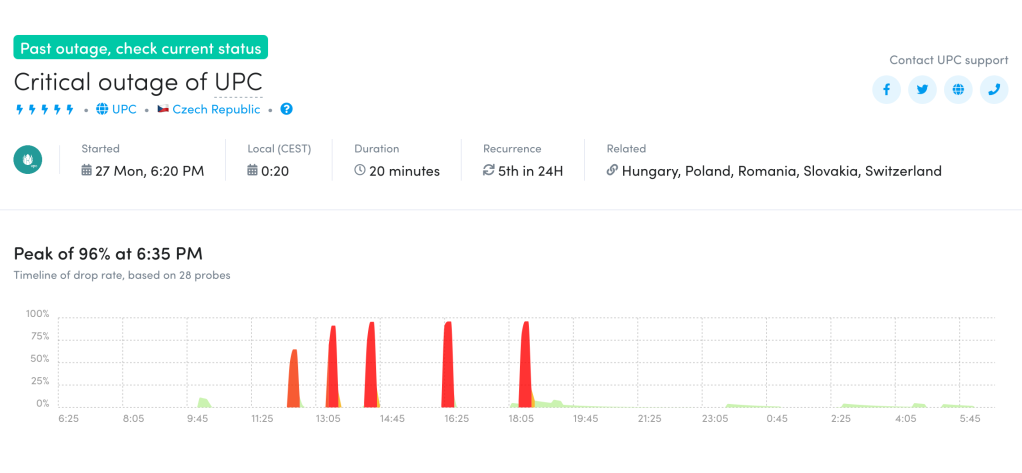

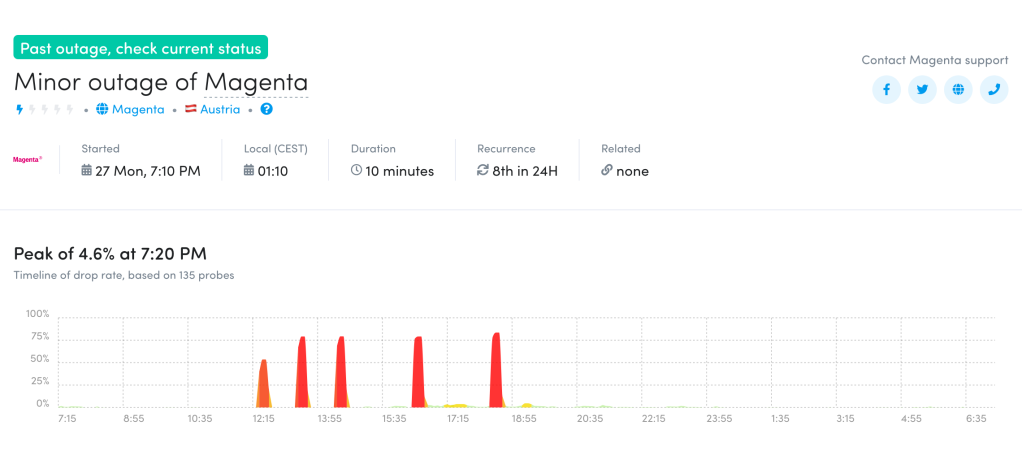

Other members of the Liberty Global family, including UPC (Switzerland), UPC (Poland), Ziggo (Netherlands), UPC (Hungary), Unity Media (Germany), UPC (Czech Republic), and Magenta (Austria) also experienced similar disruptions, as shown in the Fing Internet Alerts graphs below.

Fing Internet Alerts graph for UPC (Switzerland), April 27

Fing Internet Alerts graph for UPC (Poland), April 27

Fing Internet Alerts graph for Ziggo (Netherlands), April 27

Fing Internet Alerts graph for UPC (Hungary), April 27

Fing Internet Alerts graph for Unity Media (Germany), April 27

Fing Internet Alerts graph for UPC (Czech Republic), April 27

Fing Internet Alerts graph for Magenta (Austria), April 27

An article in industry publication ISPreview provided an overview of the problems experienced by customers of the impacted providers, a timeline of the disruptions, and some insights into the cause of the problems. The article cites research from Internet monitoring firm ThousandEyes that traces the outage to problems with AS6830, Liberty Global’s UPC broadband network. The article also quotes a Virgin Media spokesperson, who noted “Our investigation is still ongoing but we believe the issue to have been caused by a technical fault within our core network which resulted in intermittent connectivity issues.”

Unexplained

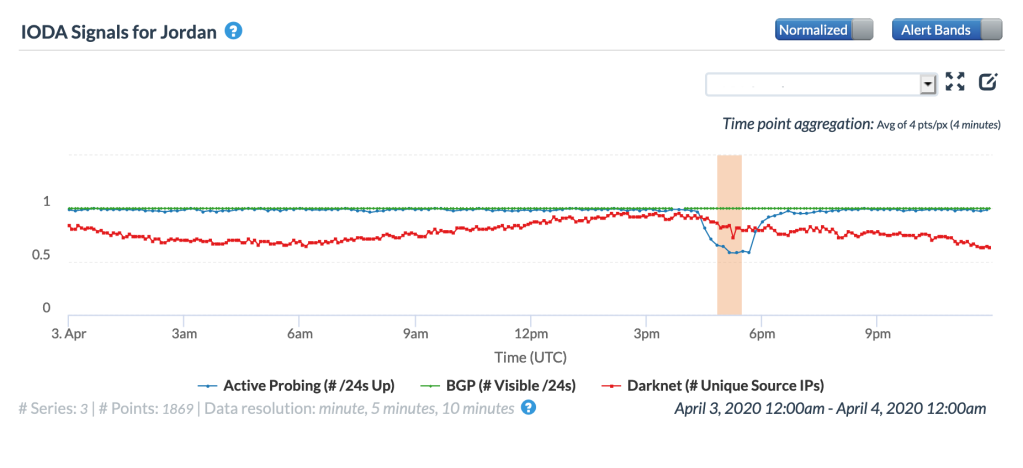

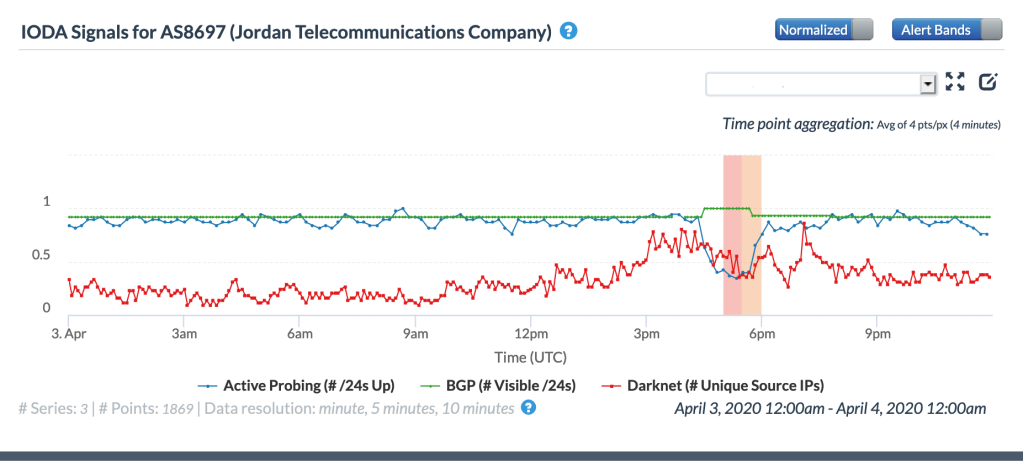

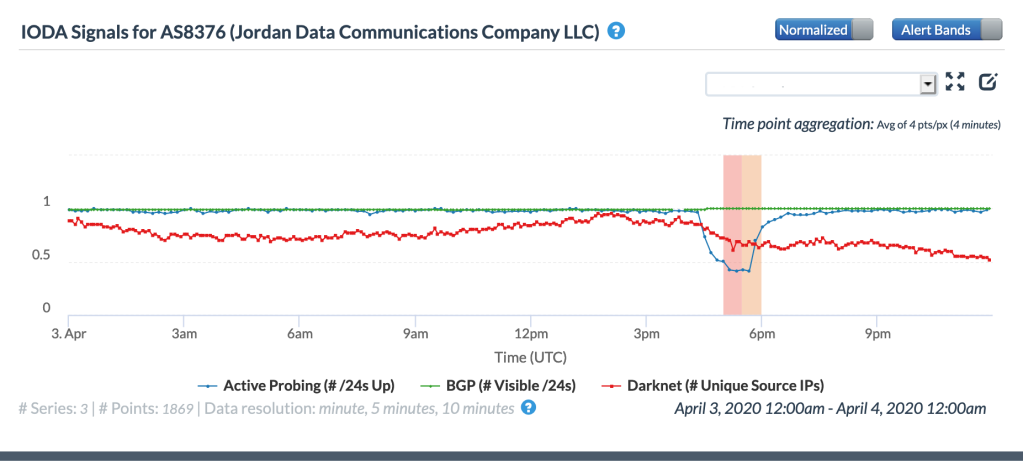

On April 3, a Netblocks Tweet highlighted a “nation-scale Internet outage in Jordan”, lasting for approximately 90 minutes as the country entered an initial COVID-19 lockdown. The outage is visible in the CAIDA IODA graphs below, at a national level as well as at two major network providers in the country, with declines clearly evident in the active probing metric. Although the outage occurred during a 24-hour curfew, it isn’t clear what the root cause was, as routing did not appear to be impacted, and Darknet traffic continued to flow from the country.

CAIDA IODA graph for AS8697 (Jordan Telecommunications Company), April 3

CAIDA IODA graph for AS8376 (Jordan Data Communications Company), April 3

Conclusion

There was a noticeable absence of government-directed Internet disruptions in April. That is not to say that there were none, but those that did occur were not significant enough to be observed through publicly available tools.

The optimist would suggest that the pandemic has forced governments around the world to recognize the value of the Internet, leading them to seek alternate solutions where they would have once implemented a wide-scale Internet shutdown. However, the reality is that the underlying drivers of these shutdowns, including elections, protests, and exams, have largely not occurred due to the stay-at-home orders in place in most countries, and as such, government-directed Internet shutdowns in response to these events have not been necessary.

Nevertheless, it remains our hope that governments not only recognize the value of the Internet for so many facets of our everyday lives, but also take steps to close the digital divides within their countries, making Internet access available and affordable to all.